For the second year in a row, Seth Isaacson led the Beaver Robotics team – BeaverAUV – in building an autonomous robotic submarine for the 2017 International RoboSub competition. In addition to helping design and create the robot itself, Isaacson – who is currently attending Harvey Mudd – also helped secure several sponsorships for the team. Below are his reflections on the competition and the year as a whole.

Written by Seth Isaacson ’17

I sat down for an hour to write this blog post and, as is typical for me, it got pretty long. So I realized I should add a brief foreword:

In a nutshell, RoboSub 2017 was awesome. We learned a ton. The technical knowledge gains were huge – at least as much as last year – and we also got much better at effectively working together. We made some mistakes in terms of careless design and bad project management, but they all taught us a good lesson. While we didn’t accomplish any more in terms of the score line than last year, we built a better, more capable robot. If we had another year, we’d be in an awesome position to have one of the best robots in the competition.

Now, for the long version:

In many ways, this year of RoboSub was an eerie repeat of the 2016 competition. We finished between 22nd and 25th place, and we made it through the qualification round by autonomously navigating both to depth and forwards about 15 meters through the gate. This took us to the semi-final round, where, just like last year, we started to have issues. In our first semi-final run, we didn’t get off the starting dock (I’ll get into the details about that later), and in our second semi-final run, while we got through the gate, we’re still unsure if we managed to hit a buoy (one challenge in a competition like this is figuring out exactly what the robot is trying to do at what time).

This same EXACT thing happened last year.

And it’s ironic because after the 2016 competition, we as a team were convinced we had come up with “perfect” solutions to all of the issues we encountered. We were incredibly inspired and super excited to get to work on building a championship robot for 2017.

We wanted to:

- Develop all new hardware that looked much better;

- Have an easier to work with robot with easily serviceable electronics and easily swappable batteries;

- Use an extremely powerful computer for neural-network based vision tracking so that we could master all vision-based tasks;

- Add a dynamic state machine for mission control logic so we can use multiple mission profiles and make rapid changes to top-level mission logic (more below);

- Add torpedos, because they’re cool and should be doable with our awesome vision system;

- Try to develop a sonar system to navigate to the acoustic pinger.

So those were our goals. And did we accomplish them?

Let’s break it down.

Did we …

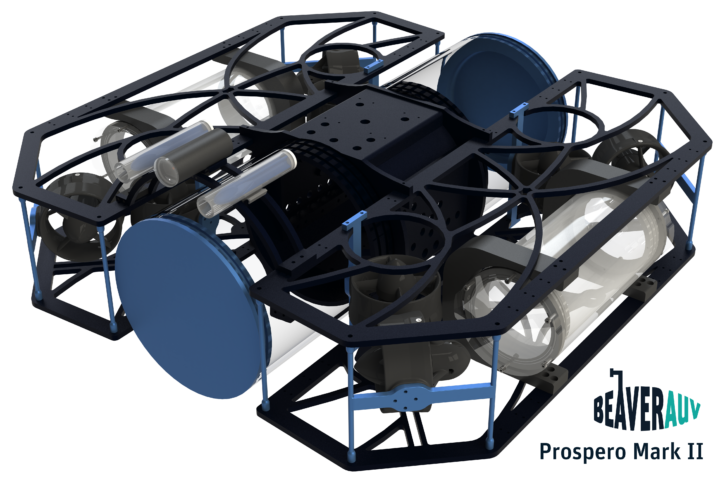

… develop all new hardware that looked much better? Done and done!

All hardware was fully replaced, and (not to congratulate myself too much) I think it looked pretty awesome. (Editor’s note: It looked very awesome.)

… have an easier-to-work-with robot with easily serviceable electronics and easily swappable batteries? Well, yes and no.

In one sense, we absolutely did. We developed a (mostly) custom electronics infrastructure to facilitate all the communication within the AUV. It was a challenging project, but it was a lot of fun and we learned a lot. We did this so we could plug in different circuit boards to our electronics system and be able to individually remove each board one at a time for modifications. While we accomplished this, we didn’t think through the design quite well enough. This meant there was still a massive spiderweb of cables everywhere (not great for usability).

The other thing is we made the classic engineering mistake of having a system that mostly worked, adding a bunch of new stuff, and making everything smaller. We added a lot of new, large hardware this year, but the internal volume of the sub was much smaller. That doesn’t add up. You can probably tell from the images there was not a cubic centimeter of space to spare. That’s a mistake I won’t be making again anytime soon.

The swappable batteries were actually very nice. We could remove each battery one at a time without powering off the sub, which made it possible to have the sub constantly running. It also meant we didn’t have to open the main pressure vessel to swap a battery.

… use an extremely powerful computer for neural-network based vision tracking so that we could master all vision-based tasks? This was simultaneously the greatest success and the biggest failure of the year.

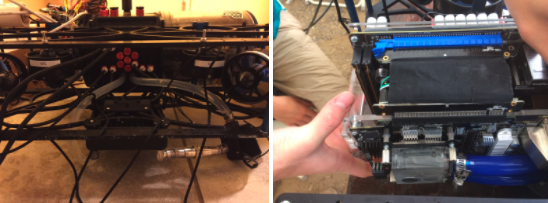

We put a very, very powerful computer in our sub – more powerful than any of our laptops or personal desktop computers (tech specs: Intel i5 CPU and an NVIDIA GTX 1060 GPU). That created a LOT of challenges. The main issues were a) the computer completely filled up half of the hull with little room to breathe, and b) computers produce a lot of heat, and if that heat can’t go anywhere, problems arise. This basically meant we needed some system to transfer the heat the computer produced from inside the tiny plastic tube to the very large mass of water the robot operates in. To do that, we water-cooled the computer: We pumped water across specialized parts that sit on the main processors to pull the heat out of the processors and into the cooling water. Then, we moved that water out of the hull and through a radiator to release the heat into the pool. This is called a “closed-loop system” because the water inside the tubing always stays inside the loop, and the water or air outside the system stays out. Just making all that hardware fit was very hard, but also a lot of fun and very rewarding when it actually worked.

Here’s a picture of that system:

So why did we need a computer that powerful in the first place?

Well, we needed to be able to run our “machine vision” algorithms. The basic idea of machine vision is to teach a computer how to recognize objects through a camera. We used a specific type of algorithm called a “convolutional neural network,” which is loosely modeled on how a human brain works. Think about it like this: A computer doesn’t know what a buoy is, what it looks like, or how to identify it. So what we had to do is literally show our algorithm several hundred pictures of buoys. Then, we “trained” the system, which is an 18-hour-long process where the computer goes through all of the images and tries to decide what a buoy is. Once it’s trained, if the computer sees a video of a buoy, it can find it and tell us where it is. It’s really cool!

But – as you know if you followed us on Twitter – we ran into a lot of issues with this system. First of all, the water cooling pump broke. With no replacement, we had to improvise. First, we had the idea to get a bilge pump from the local boating store. A bilge pump is used to drain a flooding boat. It sucks up water from its environment and pumps it through a tube. But this meant we had to adapt our system to be an open-loop water cooling system: We would pump water in from the pool, across the processor, and then exhaust it back into the pool. While this worked great in the water, it wasn’t as great in air since there was no water to suck in, and no water = absolutely no cooling. This is REALLY bad for computers. (You can see some hilarious pictures on Twitter of how we kept the pump submerged.) The issue became a serious problem when the computer overheated and turned itself off while we were loading it into the water for our first semifinal run. That meant we couldn’t do anything for the whole run. Luckily, my teammate Dan Bassett ’17 came up with a pretty funny and clever solution: He put a water bottle over half of the bilge pump, which we could use as a mini reservoir of water, turning it back into a closed loop. That worked great (enough), and did the job. Plus, the judges and sponsors all loved the creativity.

All these issues aside, the reason vision tracking was a disappointing failure was that, despite how awesome our computer and algorithms were, we still couldn’t detect the objects underwater. The annoying reason why? Our camera just wasn’t good enough. We used a really cheap webcam encased in a clear epoxy, which just didn’t cut it. Now I know why teams use $700+ cameras.

Moral of the story: Test early, test often. This is a mistake that could (and should) have been prevented with extra testing. It’s a valuable (albeit disappointing) lesson to have learned.

… add a dynamic state machine for mission control logic so we can use multiple mission profiles and make rapid changes to top-level mission logic? Success.

Last year, we didn’t seriously start work on our state machine until a few weeks before the competition, and because of that, it was terrible. This year, though, we had one of the better state machines in the competition.

A state machine is a program that allows the robot to easily switch between multiple different “states.” Each state could be anything. For example, we used one state per main task. Within that, each sub-routine could be its own state – achieving the proper depth, scanning for a buoy, etc.

With so many different tasks and actions to switch between, it can be difficult to manage all these transitions, but my teammate Oliver Geller ’17 built a cool system that made this very easy. The lesson we learned: Starting software at the beginning of the year pays off.
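To make the idea concrete, here is a minimal sketch of a state machine in Python. It is not the code Oliver wrote: the state names, the `Sensors` fields, and the target depth are all made up for illustration. Each state is just a function that looks at the latest sensor readings and returns the name of the state to run next.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Sensors:
    """Hypothetical sensor snapshot; the field names are illustrative only."""
    depth_m: float
    buoy_visible: bool
    buoy_distance_m: float


TARGET_DEPTH_M = 1.5  # assumed value, not from the real robot


def dive(s: Sensors) -> str:
    # Stay in this state until the target depth is reached.
    return "scan_for_buoy" if s.depth_m >= TARGET_DEPTH_M else "dive"


def scan_for_buoy(s: Sensors) -> str:
    # Keep scanning until the vision system reports a buoy.
    return "ram_buoy" if s.buoy_visible else "scan_for_buoy"


def ram_buoy(s: Sensors) -> str:
    # Drive forward until we make contact with the buoy.
    return "done" if s.buoy_distance_m <= 0.0 else "ram_buoy"


# The table of states: swapping entries here changes the mission profile.
STATES: Dict[str, Callable[[Sensors], str]] = {
    "dive": dive,
    "scan_for_buoy": scan_for_buoy,
    "ram_buoy": ram_buoy,
}


def run_mission(readings: List[Sensors], start: str = "dive") -> List[str]:
    """Feed sensor readings through the machine; return the states visited."""
    state = start
    visited = [state]
    for s in readings:
        if state == "done":
            break
        next_state = STATES[state](s)
        if next_state != state:
            visited.append(next_state)
        state = next_state
    return visited
```

Because the mission is just a table of named states, changing the top-level logic means editing the table or one small function rather than untangling one giant loop.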

… add torpedos, because they’re cool and should be doable with our awesome vision system? Sadly, no.

We built torpedos as well as a pneumatics (compressed air) system to actuate them. However, integration would’ve been a big challenge for three reasons:

- We didn’t have space for the hardware inside the hull. Oops! That’s just bad planning. More careful design could have gotten around that.

- The software to be able to use torpedos is very challenging and requires working sonar and vision code. That wasn’t going to happen.

- This reason is a bit less intuitive. You see, to launch the sub, you suck air out of the hull in order to put a small vacuum in the pressure vessel. This external pressure ensures the end caps and hulls stay rigidly attached. Adding pressurized air lines through the hull would’ve introduced a HUGE risk. If one of those lines leaked, we all of a sudden would have positive pressure inside the hull. This could have pushed the end caps all the way off and completely flooded the hull – which is about the worst thing that could’ve happened to us, as that would’ve ruined all our electronics. Pneumatics stuff always leaks, so the only way to do it safely(ish) is to have all the pneumatics in a secondary hull.

Lesson learned: do your research and plan more carefully.

… try to develop a sonar system to navigate to the acoustic pinger? We tried, but we didn’t quite get it done.

Sonar is really hard. Basically, there’s a pinger (speaker) underwater that makes a very high-pitched chirp every once in a while. You have to listen to that signal on the robot and figure out where it came from using hydrophones (underwater microphones) and then navigate to it. However, even just acquiring data from the hydrophones proved to be a challenge.
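For a sense of the math involved, here is a small sketch of one common way to turn hydrophone data into a direction: compare when the chirp arrives at two hydrophones. This is not what we built (we never got that far), and the function name, the 10 cm spacing in the example, and the assumption that the pinger is far enough away for the sound to arrive as a flat wavefront are all mine.

```python
import math

# Approximate speed of sound in fresh water; the real value varies with temperature.
SPEED_OF_SOUND_WATER_M_S = 1480.0


def bearing_from_tdoa(delta_t_s: float, spacing_m: float,
                      speed_m_s: float = SPEED_OF_SOUND_WATER_M_S) -> float:
    """Estimate the bearing to the pinger in degrees from a two-hydrophone pair.

    delta_t_s is (arrival time at hydrophone B) - (arrival time at hydrophone A).
    0 degrees means the pinger is straight ahead (broadside to the pair);
    positive angles lean toward hydrophone A, which heard the chirp first.
    """
    ratio = speed_m_s * delta_t_s / spacing_m
    # Measurement noise can push the ratio slightly outside [-1, 1]; clamp it
    # so asin() does not raise a ValueError.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))
```

With hydrophones 10 cm apart, the largest possible time difference is about 68 microseconds, which hints at why the data acquisition hardware has to sample so quickly.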

Plan A was to design a custom data acquisition circuit board, which I did, but it didn’t work. So then we spent a lot of our budget on a pre-built board, but we also couldn’t get that to work. By that time we had to cut the cord.

I was actually really happy with how well we were able to decide to give up on this part of the project. It may sound weird, but one lesson we took from last year was how to prioritize and recognize when a system was failing. From our experiences, we were able to decide to spend our time on systems that might have a chance of working. That was a definite improvement over last year.

SO WHAT DID WE LEARN?

Here are three lessons I learned from RoboSub that apply not only to engineering, but also to life:

- Walk before you can run. And you should be really, REALLY good at walking before you even try jogging. We were crazy ambitious for our second year, and it likely kept us from reaching most of our big goals. If we had slowed down and approached each system one at a time, we could have accomplished more.

- Don’t over-engineer. Going into the year, we had tons of great ideas and we were justifiably eager to implement them. However, it might have made sense to stick with the flawed 2016 design, make some improvements, and only majorly overhaul the electronics and software. If we had done that, we may have been able to start testing in December rather than July (mere weeks before the competition).

- Try not to fall victim to the Dunning-Kruger effect. Confidence is valuable; uninformed cockiness is dangerous. This year, we as a team often straddled the line between the two, and there were several times when we were all collectively a bit overwhelmed by the magnitude of the challenge. A specific moment that comes to mind is when we were gearing up for our first day of pool testing on the very day we had to ship. That was scary!

So while I do think we could’ve done more, I also want to be clear: I’m very happy with how the year went and proud of all we got done.

Good luck to the team at the 2018 RoboSub competition!
